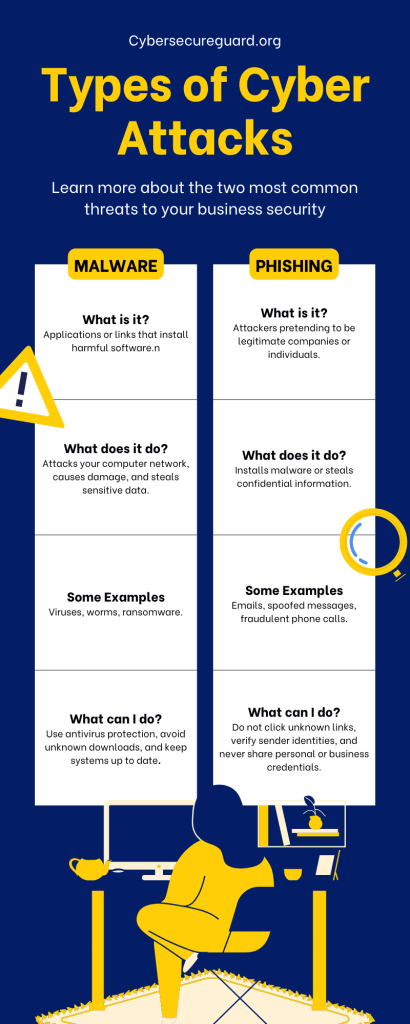

Think of a classic phishing email. Not too hard, right? You’re probably picturing a poorly written message full of spelling mistakes, an odd greeting like “Dear valued customer”, and a suspicious-looking sender such as info@amaz0n-support.com. For years, spotting these scams was almost effortless.

But those days are gone. Cybercriminals have leveled up. With the power of Artificial Intelligence, phishing emails are no longer clumsy or easy to dismiss. Instead, they’re polished, convincing, and often indistinguishable from legitimate business communication. No more obvious typos, no strange grammar—just clean, professional messages that can mimic the style of real companies with frightening accuracy.

This marks a turning point in digital threats. The very same AI technologies that bring us helpful chatbots, real-time translations, and creative tools are now being weaponized by attackers. The result: highly sophisticated phishing campaigns that use psychology, data, and automation to trick even the most cautious recipients.

In this article, we’ll break down why AI-generated phishing emails are harder to detect than ever—and what makes them such a dangerous evolution of an old scam.

1. The Death of Typos: Flawless Language and Grammar

Not too long ago, spotting a phishing email was almost laughably easy. Awkward phrasing, endless typos, and broken grammar were dead giveaways. Lines like “Dear valuedest customer” or “You account has been suspend” practically screamed scam. Many recipients didn’t even need to finish reading—the sloppy language alone was enough to raise red flags.

Today, that safety net is gone. AI-powered language models such as ChatGPT, Google Gemini, or Claude have armed cybercriminals with the ability to produce messages that are grammatically perfect, polished, and highly convincing. These tools don’t just write without errors—they can mirror tone, jargon, and structure with unsettling accuracy.

That means an AI can draft an email in the dry, formal style of a bank, in the casual tone of a coworker, or as an urgent notice from the IT department demanding immediate action. Each version sounds authentic because the AI understands context and adapts its style accordingly.

Even more dangerous: AI isn’t limited to writing generic professional emails. It can mimic the specific communication style of real companies or individuals. If a business tends to use certain phrases, formatting, or even signature lines, AI can replicate them almost perfectly. The result is a message that feels familiar, trustworthy, and far less suspicious to the recipient.

This is where the real danger lies. Our first line of defense—our gut reaction at first glance—has been severely weakened. Where once clumsy wording triggered instant doubt, we’re now faced with polished, natural-sounding text that instinctively feels legitimate. In other words, the cheap, sloppy look of phishing is gone. What remains is a new level of linguistic perfection that conceals the fraud.

2. Hyper-Personalization at Scale

Phishing used to be a numbers game—a blunt “spray and pray” approach. Attackers would blast out thousands of nearly identical, generic emails and simply hope that a small fraction of recipients would take the bait. The majority of people could dismiss these messages easily, because they felt impersonal, vague, and out of context.

Artificial Intelligence has turned this model upside down. Instead of relying on volume alone, AI enables personalization at massive scale. By analyzing vast amounts of publicly available data—LinkedIn profiles, Twitter/X posts, press releases, company websites, or even conference attendee lists—AI systems can generate emails that feel tailor-made for each individual recipient.

Imagine opening an email that:

- Uses your full name correctly, including proper spelling and formatting.

- References a recent corporate event you or your company hosted.

- Mentions a shared connection: “I spoke with [actual colleague’s name], who suggested I reach out to you…”.

- Adopts the industry-specific jargon you use every day in your work.

This level of customization dramatically increases the credibility of the message. It no longer looks like a random scam, but instead like a natural continuation of your real-world interactions. The fact that the information comes from publicly accessible sources makes it even more deceptive—nothing in the email seems “fake” at first glance.

The power of AI lies in its ability to do this not just once, but thousands of times simultaneously. What used to be the labor-intensive work of a skilled social engineer can now be automated. Each recipient gets an email that feels uniquely relevant, even though it’s generated at scale by an algorithm.

And that’s the real threat: when a phishing attempt resonates personally—when it references your job, your network, or your company—it bypasses the natural skepticism we usually apply to unknown senders. The result is a message that feels authentic, timely, and highly convincing, making it far more difficult to identify as fraud.

3. Context Awareness and Believable Narratives

Old-school phishing emails were notoriously clumsy in their setups. The classic “Your account has been suspended, click here to restore access” was often so generic and out of context that most recipients recognized it as fraud immediately. The lack of a credible backstory was actually one of the strongest defenses users had.

AI changes this dynamic completely. Modern phishing campaigns can now generate plausible, multi-layered narratives that feel like a natural part of your workday. Instead of blunt scare tactics, the email comes across as an ordinary continuation of something you’re already involved in.

Consider these examples:

- Subject line: “Follow-up on yesterday’s Teams meeting about Q3 project planning”

- Body text: “Attached you’ll find the revised version of the document we discussed. Please review the updates and send feedback by 4 PM today.”

On the surface, nothing feels unusual. The message references a meeting (a common daily occurrence), attaches a file (perfectly normal in professional communication), and sets a deadline (creating urgency). For a busy employee juggling multiple projects, this doesn’t look suspicious—it looks routine.

The key threat here is contextual awareness. AI doesn’t just write clean sentences; it can embed messages into believable scenarios. Whether it’s referencing project planning, financial reports, HR updates, or IT troubleshooting, the email is crafted to mirror the actual workflows of modern companies.

This makes phishing attempts dramatically harder to spot. Where the lack of context once betrayed a scam, AI simply creates the missing context. It fabricates realistic situations that feel entirely consistent with a recipient’s daily responsibilities. Add in time pressure or a request that seems urgent, and even cautious professionals may click before questioning the authenticity.

In other words: AI has shifted phishing from the realm of “obviously fake” into the world of “perfectly plausible.” And that makes these attacks not only more effective but also more dangerous.

4. The Automation of Deception

In the past, sophisticated phishing required time, effort, and expertise. A skilled attacker had to manually research targets, gather personal or corporate details, craft convincing messages, and send them out one by one. This meant that highly personalized phishing was usually limited to spear-phishing campaigns, including “whaling” attacks against high-value individuals such as executives.

AI has obliterated those limitations. With today’s tools, a cybercriminal no longer needs hours of manual work. Instead, they can simply instruct an AI agent to:

- Scrape data from social media, websites, and news sources.

- Generate thousands of unique, personalized emails tailored to different recipients.

- Embed realistic narratives, deadlines, or attachments for added credibility.

- Send these messages at scale within minutes.

This automation lowers the barrier to entry dramatically. What once required technical skill and patience can now be executed by almost anyone with access to AI tools, including novice criminals. The result is a sharp increase in both the quality and quantity of phishing attempts.

Worse still, automated systems don’t get tired, distracted, or sloppy. They can iterate continuously, refining their messages to avoid spam filters, adapting to responses, and even testing which variations get the highest engagement—much like a marketing campaign, but for fraud.

The outcome is a threat landscape where highly personalized, believable phishing is no longer the exception, but the norm. At scale, this transforms phishing from an occasional annoyance into a relentless, industrialized assault—one that even seasoned professionals may struggle to defend against.

5. Multimodal Threats: More Than Just Text

Phishing has evolved far beyond the email inbox. With the rise of AI, attackers are no longer limited to text—they can now deploy multimodal attacks that combine email, voice, and even visual deception into one seamless fraud campaign.

Here’s how it looks in practice:

- Voice Cloning (Vishing): Imagine receiving a highly convincing phishing email in the morning. Later that day, you get a call from what sounds exactly like your manager’s voice, following up on the request: “Hey, did you see the document I sent you earlier? I need it approved urgently.” With AI-powered voice synthesis, criminals can create realistic clones of familiar voices, making the fraud far harder to detect.

- Fake Websites & Login Pages: AI tools can quickly generate near-identical replicas of legitimate websites, complete with accurate fonts, logos, and layouts. A link in the email takes you to a login page that looks almost indistinguishable from the real thing. The second you enter your credentials, they’re in the attacker’s hands.

- Synthetic Media: Beyond emails and calls, AI can produce fake chat messages, realistic images, or even deepfake videos to reinforce the illusion. An email followed by a video message from a “CEO” is far more convincing than text alone.

This convergence of channels creates layered attacks that feel authentic from every angle. Where traditional phishing relied on a single deceptive message, AI-enabled phishing can orchestrate an entire narrative across multiple platforms—email, phone, and web—leaving little room for doubt.

The New Rules of Vigilance

Since the old warning signs (bad grammar, odd phrasing, clumsy design) are disappearing, defending yourself requires a new playbook:

- Distrust Perfection: A flawless, well-written email is no longer a free pass to trust. Be especially cautious when everything looks “too right.”

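- Verify the Sender (Closely!): Hover over the sender’s name to check the full address, and read the domain from right to left. An email from Microsoft Support will never come from service@microsoft-security.xyz.com: that address belongs to the domain xyz.com, and “microsoft-security” is only a subdomain label that anyone controlling xyz.com can create.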

- Slow Down: Urgency is still the attacker’s favorite weapon. Don’t let artificial deadlines like “within the hour” push you into rushed decisions. Take a breath, then verify.

- Independent Verification: Never click on links inside suspicious emails. If you need to log in to your bank, type the URL manually or use the official app. If the request seems internal, call the colleague directly or confirm in a secure chat.

- Enable Multi-Factor Authentication (MFA): This is your safety net. Even if you accidentally give away your password, MFA makes it much harder for attackers to gain access, because they also need the second factor (like a code sent to your phone). Treat that code like a password: never read it out or type it in at someone else’s request.
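
For readers who want to see the sender and link checks from the list above in concrete form, here is a minimal Python sketch using only the standard library. The allowlist `TRUSTED_DOMAINS`, the function names, and the example addresses and URLs are all hypothetical and chosen for illustration. The sketch only compares domain names; it cannot tell whether a `From` header was forged, so it complements mail-server checks and human judgment rather than replacing them.

```python
from email.utils import parseaddr
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration; a real one would be maintained
# by whoever runs the check.
TRUSTED_DOMAINS = frozenset({"microsoft.com"})


def is_trusted_host(host: str, trusted: frozenset[str] = TRUSTED_DOMAINS) -> bool:
    """Return True if host is a trusted domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    # Match on the END of the name: "x.microsoft.com" passes,
    # "microsoft-security.xyz.com" and "microsoft.com.evil.example" do not.
    return any(host == d or host.endswith("." + d) for d in trusted)


def sender_is_trusted(from_header: str) -> bool:
    """Check the domain of the real address, ignoring the display name."""
    _display_name, address = parseaddr(from_header)
    _local, sep, domain = address.rpartition("@")
    return bool(sep) and is_trusted_host(domain)


def link_is_trusted(url: str) -> bool:
    """Check the host a link actually points to, not its visible text."""
    parts = urlsplit(url)
    return (
        parts.scheme == "https"
        and parts.hostname is not None
        and is_trusted_host(parts.hostname)
    )


if __name__ == "__main__":
    for header in (
        "Microsoft Support <service@microsoft-security.xyz.com>",
        "Microsoft Support <support@email.microsoft.com>",  # made-up address
    ):
        print(header, "->", sender_is_trusted(header))

    for url in (
        "https://login.microsoft.com/",
        "https://microsoft.com@evil.example/",  # real host is evil.example
        "https://microsoft.com.login-check.example/signin",
    ):
        print(url, "->", link_is_trusted(url))
```

The design choice worth noting is that both checks match on the end of the host name. A familiar brand name at the start of an address or URL says nothing about who controls it.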

AI has fundamentally changed the phishing landscape. Attacks are now smarter, faster, more personalized, and no longer confined to text alone. But while the tools of cybercriminals evolve, your defense doesn’t have to be complicated. A mix of healthy skepticism, common sense, and proactive safeguards like MFA can go a long way in keeping you safe.

Conclusion: How to Detect AI-Generated Phishing Emails

AI has transformed phishing from sloppy scams into professional, highly convincing attacks. Flawless grammar, hyper-personalization, believable narratives, automation, and even multimodal deception make these emails harder to spot than ever before.

The key to detection lies in a new mindset: don’t trust perfection, always double-check senders, resist urgency, verify requests through independent channels, and protect your accounts with multi-factor authentication.

In a world where AI empowers cybercriminals, the strongest defense is a mix of awareness, skepticism, and proactive security habits. Stay alert, and you’ll stay ahead.
